( CCC 21C3, Berlin ~ 26 - 29 December 2004)

| Advanced internet searching strategies & "wizard seeking" tips by fravia+, 28 December 2004, Version 0.023 This file dwells @ http://www.searchlores.org/berlin2004.htm Introduction Scaletta of this session A glimpse of the web? Searching for disappeared sites Many rabbits out of the hat Slides Some reading material An assignment Nemo solus satis sapit {Bk:flange of myth } {rose.htm} |

This document is listing a palette of possible points to be discussed is my own in fieri contribution to the 21C3 ccc's event (December 2004, Berlin). The aim of this workshop is to give European hackers some "cosmic" searching power, because they will need it badly when (and if) they will wage battle against the powers that be. The ccc-friends in Berlin have insisted on a "paper" to be presented before the workshop, which isn't easy, since a lot of content may depend on the kind of audience I'll find: you never know, before, how much web-savvy (or clueless) the participants will be... usually you just realize it during (or after) a session. Hopefully, a European hacker congress will allow some (more) complex searching techniques to be discussed. Anyway the real workshop will probably differ a lot from this list of points, techniques and aspects of web-searching that need to be explained -again and again- if we want people to understand that seeking encompasses MUCH MORE than just using the main search engines ā la google, fast or inktomi with "one-word" simple queries. I have kept this document on a rather schematic plane: readers will at least be able to read this file before the workshop itself,which may prove useful: in fact there are various things to digest even during such a short session, and many lore will remain uncovered. The aim is anyway to point readers towards non-commercial working approaches and possible solutions; above all, to enable them to find more (sound) material by themselves on the deep web of knowledge. If you learn to search the web well, you won't need nobody's workshops anymore :-) Keep an eye on this URL, especially if you do not manage to come to Berlin... It may even get updated :-) |

Introduction

I'll try my best, today, to give you some cosmic power. And I mean this "im ernst".

In fact everything (that can be digitized) is on the web, albeit often buried under tons of commercial crap. And if you are able to find, for free, whatever you're looking for, you have considerable power.

The amount of information you can now gather on the web is truly staggering.

Let's see... how many fairy tales do you think human beings have ever written since the dawn of human culture?

How many songs has our race sung?

How many pictures have humans drawn?

How many books in how many languages have been drafted and published on our planet?

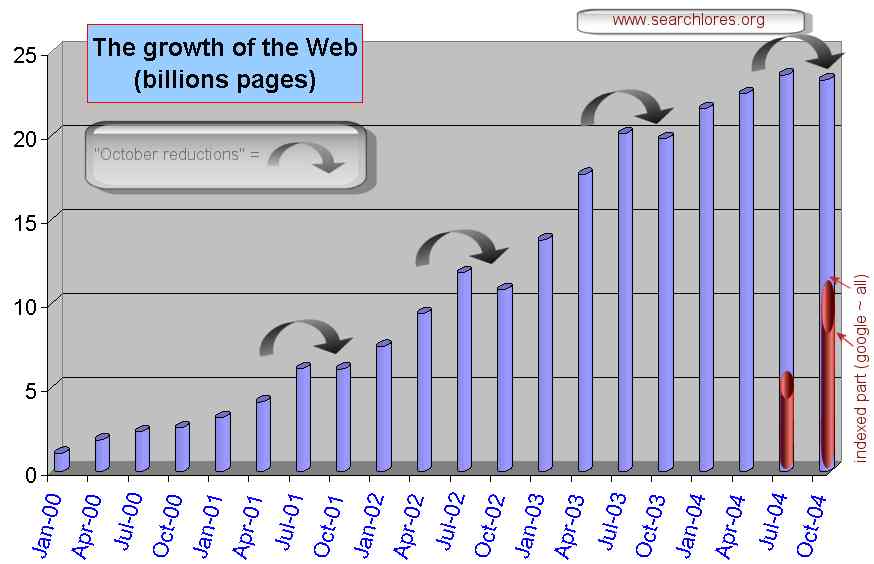

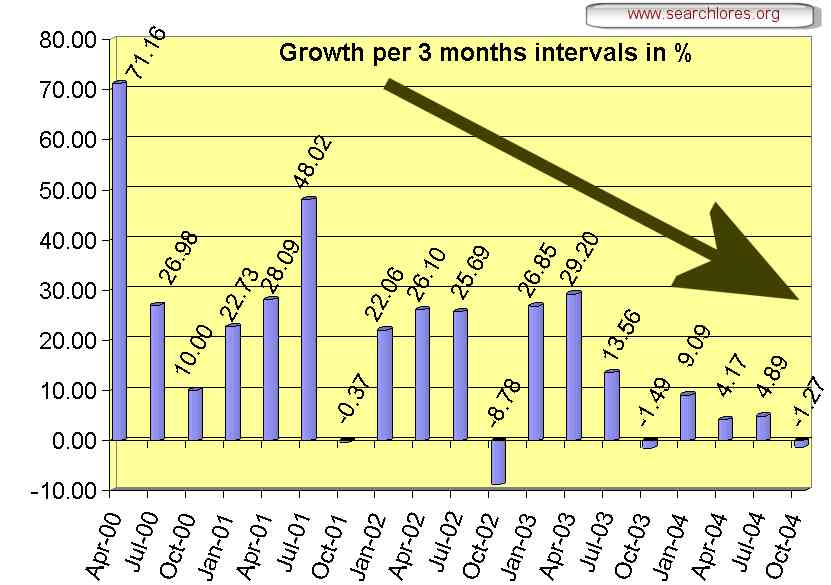

The Web is deep! "While I am counting to five" hundredthousands of new images, books, musics and software programs will be uploaded on the web (...and millions will be downloaded :-) ONE, TWO, THREE, FOUR, FIVE

The mind shudders, eh?

The knowledge of the human race is at your disposal!

It is there for the take! Every book, picture, film, document, newspaper that has been written, painted, created by the human race is out there somewhere in extenso, with some exceptions that only confirm this rule.

But there are even more important goodies than "media products" out there.

On the web there are SOLUTIONS! WORKING solutions! Imagine you are confronted with some task, imagine you have to solve a software or configuration problem in your laptop, for instance, or you have to defend yourself from some authority's wrongdoing, say you want to stop those noisy planes flying over your town... simply imagine you are seeking a solution, doesn't matter a solution to what, įa c'est égale.

Well, you can bet: the solution to your task or problem is there on the web, somewhere.

Actually you'll probably find MORE THAN ONE solution to your current problem, and maybe you'll be later able to build on what you'll have found, collate the different solutions and even develop another, different, approach, that will afterwards be on the web as well. For ever.

The web was made FOR SHARING knowledge, not for selling nor for hoarding it, and despite the heavy commercialisation of the web, its very STRUCTURE is -still- a structure for sharing. That's the reason seekers can always find whatever they want, wherever it may have been placed or hidden. Incidentally that is also the reason why no database on earth will ever be able to deny us entry :-)

Once you learn how to search the web, how to find quickly what you are looking for and -quite important- how to evaluate the results of your queries, you'll de facto grow as different from the rest of the human beings as cro-magnon and neanderthal were once upon a time.

"The rest of the human beings"... you know... those guys that happily use microsoft explorer as a browser, enjoy useless flash presentations, browse drowning among pop up windows, surf without any proxies whatsoever and hence smear all their personal data around gathering all possible nasty spywares and trojans on the way.

I am confident that many among you will gain, at the end of this lecture, either a good understanding of some effective web-searching techniques or, at least (and that amounts to the same in my eyes), the capacity to FIND quickly on the web all available sound knowledge related to said effective web-searching techniques :-)

If you learn how to search, the global knowledge of the human race is at your disposal

Do not forget it for a minute. Never in the history of our race have humans had, before, such mighty knowledge chances.

You may sit in your Webcafé in Berlin or in your university of Timbuctou, you may work in your home in Lissabon or study in a small school of the Faröer islands... you'll de facto be able to zap FOR FREE the SAME (and HUGE) amount of resources as -say- a student in Oxford or Cambridge... as far as you are able to find and evaluate your targets.

Very recently Google has announced its 'library' project: the libraries involved include those of the universities of Harvard, Oxford, Michigan and Stanford and the New York Public Library.

Harvard alone has some 15 million books, collected over four centuries. Oxfords Bodleian Library has 5 million books, selected over five centuries. The proposed system will form a new "Alexandria", holding what must be close to the sum of all human knowledge.

Today we'll investigate together various different aspects of "the noble art of searching": inter alia how to search for anything, from "frivolous" mp3s, games, pictures or complete newspapers collections, to more serious targets like software, laws, books or hidden documents... we'll also briefly see how to bypass censorship, how to enter closed databases... but in just one hour you'll probably only fathom the existence of the abyss, not its real depth and width.

Should you find our searching techniques (which seem amazing simple "a posteriori") interesting and worth delving into, be warned: behind their apparent simplicity a high mountain of knowledge and lore awaits you, its magnificient peaks well beyond the clouds.

I'll just show you, now, where the different paths begin. Should you really take them, you'll have to walk. A lot.

Scaletta of this Session

Examples of "web-multidepth"

"I've heard legends about information that's supposedly "not online", but have never managed to locate any myself. I've concluded that this is merely a rationalization for inadequate search skills. Poor searchers can't find some piece of information and so they conclude it's 'not online"

The depth and quantity of information available on the web, once you peel off the stale and useless commercial crusts, is truly staggering. Here just some examples, that I could multiply "ad abundantiam", intended to give you "a taste" of the deep depths and currents of the web of knowledge...